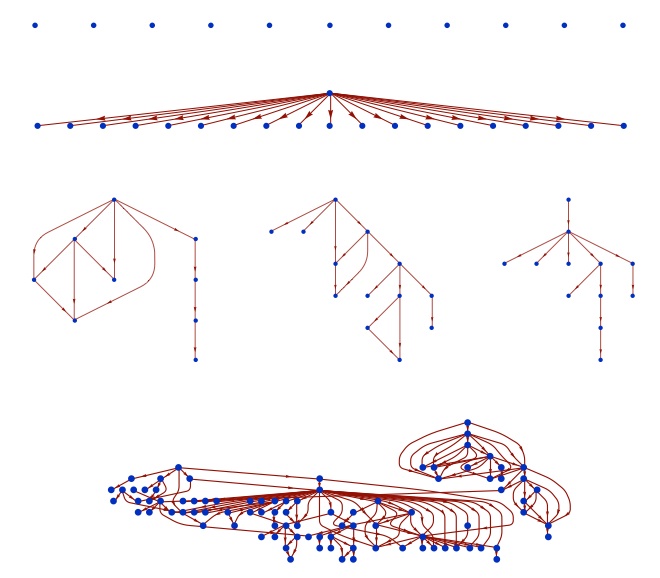

Recently, a spirited conversation was kicked off by the publication of Russell Lyons's article, "The Spread of Evidence-Poor Medicine via Flawed Social-Network Analysis," which takes aim at the well-publicized work of Nicholas Christakis and James Fowler on the social contagion of obesity, smoking, happiness, and divorce. The discussion had been confined primarily to the specialized circle of social network scholars, but it has now spilled into the public arena in the form of an article by Dave Johns in Slate (Johns has written about the Christakis-Fowler work in Slate before). Christakis and Fowler's work has received a huge amount of attention, appearing on the cover of the New York Times Magazine, on The Colbert Report, and in a ton of other places (see James's website for more links). Many others have commented in detail on Lyons's article and on the original Christakis-Fowler papers. Here I wish to address some of the issues the Slate article raises about scientists in the media.

The Slate article seems to criticize Christakis and Fowler for their media appearances, as though such publicity were inappropriate for scientists, who should be working diligently but silently in the background and leaving public commentary and recommendations to policy makers and the media. I think this criticism is not only wrong but dangerous. Many, if not most, researchers do work silently in the background, shunning the spotlight and scrutiny of the media, not out of shyness or fear of embarrassment, but because of a pervasive misunderstanding of scientific uncertainty. Hard science is simply much softer than many people realize.

ALL scientific conclusions, from physics to sociology, come with uncertainty (this does not apply to mathematics, which is not actually a science). A "scientific truth" is really something that we're only pretty sure is true. We'll never be 100% sure; that's just how science works. When one scientist tells another, "we have observed that X causes Y," it is understood that what is meant is that, if there were no real relationship between X and Y, the probability of observing a relationship as strong as the one we saw purely by chance would be very small. But statements like that don't make for good news stories. Not only are they uninteresting, but for most people they're unintelligible (which is not to say that the public is stupid: the concepts of uncertainty and statistical significance are extremely subtle and often misunderstood even by well-trained scientists). So many scientists avoid the media, because either we're asked to make definitive statements where none are possible, or the uncertainty we attach to our statements is ignored or misunderstood.

But we need scientists in the media. Only a fraction of Americans believe the planet is warming, and 40% of Americans believe in creationism. Scientists in the media can help correct these misperceptions and guide public policy. And, maybe even more importantly, scientists in the media can make science sexy. We already live in a world where science and politics are often at odds, and in which scientists who avoid the media are often overruled by politicians who seek it out. Scientists are already wary of making public statements that implicitly carry uncertainty, for fear that those statements will be interpreted as definitive. Christakis and Fowler have done us a great service by taking the risk of making statements and recommendations in the public arena based on the best of their knowledge, by raising public awareness of the science of networks, and by making science fun, interesting, and relevant.