I was surprised this week to find an article on Big Data in the New York Times Men's Fashion Edition of the Style Magazine. Finally, I thought, something in the Fashion issue that I can relate to! Unfortunately, the article by Andrew Ross Sorkin (author of Too Big To Fail) made one crucial mistake: it conflated two distinct concepts that are both near and dear to my research, Big Data and the Wisdom of Crowds, and that led to a completely wrong conclusion.

Big Data is what it sounds like — using very large datasets for ... well for whatever you want. How big is Big depends on what you're doing. At a recent workshop on Big Data at Northwestern University, Luís Amaral defined Big Data to be basically any data that is too big for you to handle using whatever methods are business as usual for you. So, if you're used to dealing with data in Excel on a laptop, then data that needs a small server and some more sophisticated analytics software is Big for you. If you're used to dealing with data on a server, then your Big might be data that needs a room full of servers.

The Wisdom of Crowds is the idea that, as collectives, groups of people can make more accurate forecasts or come up with better solutions to problems than the individuals in them could on their own. A different recent New York Times article has some great examples of the Wisdom of Crowds. The article talks about how the Navy has used groups to help make forecasts, and in particular forecasts for the locations of lost items like "sunken ships, spent warheads and downed pilots in vast, uncharted waters." The article tells one incredible story of how they used this idea to locate a missing submarine, the Scorpion:

"... forecasters draw on expertise from diverse but relevant areas — in the case of finding a submarine, say, submarine command, ocean salvage, and oceanography experts, as well as physicists and engineers. Each would make an educated guess as to where the ship is ... This is how Dr. Craven located the Scorpion.

“I knew these guys and I gave probability scores to each scenario they came up with,” Dr. Craven said. The men bet bottles of Chivas Regal to keep matters interesting, and after some statistical analysis, Dr. Craven zeroed in on a point about 400 miles from the Azores, near the Sargasso Sea, according to a detailed account in “Blind Man’s Bluff,” by Christopher Drew and Sherry Sontag. The sub was found about 200 yards away."

This is a perfect example of the Wisdom of Crowds: by pooling the forecasts of a diverse group, they came up with an accurate collective forecast.

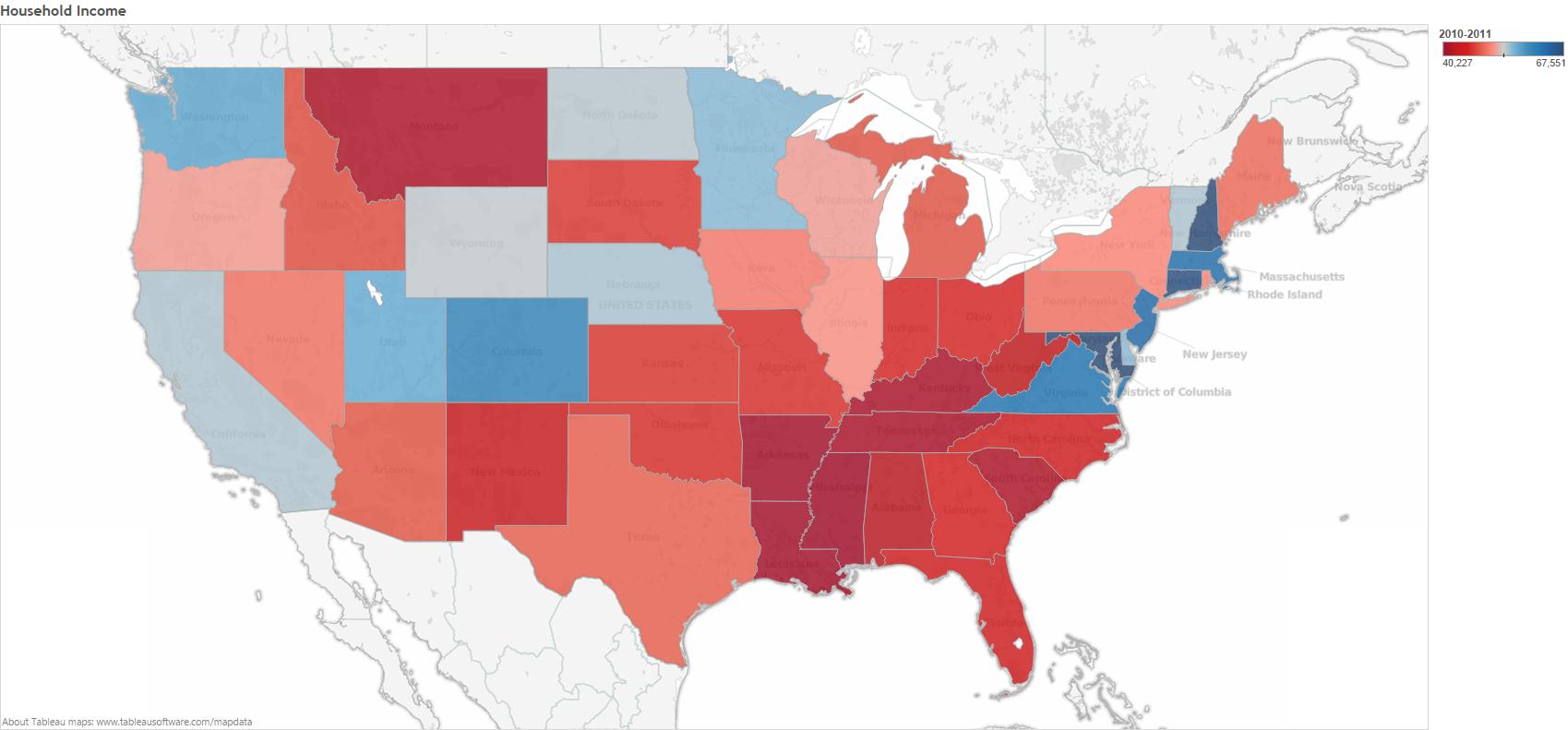

So, how do Big Data and the Wisdom of Crowds get mixed up? The mix-up comes from the fact that a lot of Big Data is data on the behavior of crowds. The central example in Sorkin's article is data from Twitter, and in particular data that showed a lot of people on Twitter were very unhappy with antigay comments made by Phil Robertson, the star of A&E's Duck Dynasty.

The short version of the story is that A&E initially suspended Robertson in response to the Twitter data, but Sorkin argues this was a business mistake because Twitter users are "not exactly regular watchers of the camo-wearing Louisiana clan whose members openly celebrate being 'rednecks'." He also cites evidence that data from Twitter does not provide accurate election predictions for essentially the same reason: the people who are tweeting are not a representative sample of the people who are voting. All of this is correct.

Using a big dataset does not mean that you don't have to worry about having a biased sample. No matter how big your dataset, a biased sample can lead to incorrect conclusions. A classic example is the prediction by The Literary Digest in 1936 that Alf Landon would be the overwhelming winner of the presidential election that year. In fact, Franklin Roosevelt carried 46 of the 48 states. The prediction was based on a huge poll with 2.4 million respondents, but the sample for the poll drew primarily on Literary Digest subscribers and on automobile and telephone owners. This sample tended to be more affluent than the average voter, and thus favored Landon's less progressive policies.
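The Literary Digest problem is easy to reproduce in a toy simulation. The sketch below is only an illustration: the population size, the 30% "affluent" share, and the support rates are made-up numbers, not figures from the 1936 poll or from Sorkin's article. It compares a very large poll drawn only from the affluent group with a small poll drawn at random from everyone.

```python
import random


def simulate_polls(seed=0, population_size=200_000, random_sample_size=1_000):
    """Compare a huge biased poll with a small random poll.

    All rates below are hypothetical and chosen only to make the effect visible.
    """
    rng = random.Random(seed)

    # Each voter is a pair: (is_affluent, supports_candidate_a).
    population = []
    for _ in range(population_size):
        is_affluent = rng.random() < 0.30            # assumed share of affluent voters
        p_support = 0.35 if is_affluent else 0.70    # assumed support rates per group
        population.append((is_affluent, rng.random() < p_support))

    # True share of support for candidate A across the whole population.
    true_share = sum(supports for _, supports in population) / population_size

    # Huge but biased poll: every affluent voter, and nobody else.
    affluent_votes = [supports for is_affluent, supports in population if is_affluent]
    biased_estimate = sum(affluent_votes) / len(affluent_votes)

    # Small but unbiased poll: a simple random sample of all voters.
    sample = rng.sample(population, random_sample_size)
    random_estimate = sum(supports for _, supports in sample) / random_sample_size

    return true_share, biased_estimate, len(affluent_votes), random_estimate


true_share, biased_estimate, biased_n, random_estimate = simulate_polls()
print(f"true share of support:          {true_share:.3f}")
print(f"biased poll  (n={biased_n}):     {biased_estimate:.3f}")
print(f"random poll  (n=1000):          {random_estimate:.3f}")
```

Under these assumptions the biased poll has tens of thousands of respondents and still lands far from the true share, while the random poll of 1,000 lands close to it. Adding more respondents from the same skewed group would not help, because the error comes from who was asked rather than from how many.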

So, Sorkin is on the right track to write a great article on how sample bias is still important even when you have Big Data. This is a really important point that a lot of people don't appreciate. But unfortunately the article veers off that track when it starts talking about the Wisdom of Crowds. The Wisdom of Crowds is not about combining data on large groups, but about combining the predictions, forecasts, or ideas of groups (they don't even have to be that large). If you want to use the Wisdom of Crowds to predict an election winner, you don't collect data on who people are tweeting about; you ask them who they think is going to win. If you want to use the Wisdom of Crowds to decide whether or not you should fire Phil Robertson, you ask them, "Do you think A&E will be more profitable if they fire Phil Robertson or not?" As angry as all of those tweets were, many of those angry voices on Twitter would probably concede that Robertson's remarks wouldn't damage the show's standing with its core audience.

The scientific evidence shows that using crowds is a pretty good way to make a prediction, and it often outperforms forecasts based on experts or Big Data. For example, looking at presidential elections from 1988 to 2004, relatively small Wisdom of Crowds forecasts outperformed the massive Gallup Poll by 0.3 percentage points (Wolfers and Zitzewitz, 2006). This isn't a huge margin, but keep in mind that the Gallup presidential polls are among the most expensive, sophisticated polling operations in history, so the fact that the crowd forecasts are even in the ballpark, let alone better, is pretty significant.

The Wisdom of Crowds works because when some people forecast too high and others forecast too low, their errors cancel out and bring the average closer to the truth. The accuracy of a crowd forecast depends both on the accuracy of the individuals in the crowd and on their diversity, that is, on how likely their errors are to be in opposite directions. The great thing about it is that you can make up for low accuracy with high diversity, so even crowds in which the individual members are not that great on their own can make pretty good predictions as collectives. In fact, as long as there are individual predictions on both sides of the true answer, the crowd forecast (the average of the individual forecasts) will always be closer to the truth than the average individual in the crowd. It's a mathematical fact that is true 100% of the time. Sorkin concludes his article, based on the examples of inaccurate predictions from Big Data with biased samples, by writing, "A crowd may be wise, but ultimately, the crowd is no wiser than the individuals in it." But this is exactly backwards. A more accurate statement would be, "A crowd may or may not be wise, but ultimately, it's always at least as wise as the average individual in it. Most of the time it's wiser."
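To make the error-cancelling argument concrete, here is a minimal sketch with four invented forecasts of a quantity whose true value is 100. The numbers and function names are hypothetical, chosen only for illustration. The first function compares the error of the averaged forecast with the average error of the individuals. The second checks a standard identity for squared errors that is not spelled out in the article but expresses the same accuracy-plus-diversity idea: the crowd's squared error equals the average individual squared error minus the variance of the forecasts.

```python
from statistics import mean


def crowd_vs_individuals(forecasts, truth):
    """Return (crowd forecast, crowd error, average individual error)."""
    crowd_forecast = mean(forecasts)
    crowd_error = abs(crowd_forecast - truth)
    avg_individual_error = mean(abs(f - truth) for f in forecasts)
    return crowd_forecast, crowd_error, avg_individual_error


def squared_error_decomposition(forecasts, truth):
    """Return (crowd squared error, average squared error, diversity).

    Diversity here means the variance of the forecasts around their own mean.
    """
    crowd_forecast = mean(forecasts)
    crowd_sq_error = (crowd_forecast - truth) ** 2
    avg_sq_error = mean((f - truth) ** 2 for f in forecasts)
    diversity = mean((f - crowd_forecast) ** 2 for f in forecasts)
    return crowd_sq_error, avg_sq_error, diversity


# Invented example: two forecasts are too low and two are too high.
forecasts = [80, 90, 105, 130]
truth = 100

crowd_forecast, crowd_error, avg_individual_error = crowd_vs_individuals(forecasts, truth)
print(crowd_forecast, crowd_error, avg_individual_error)  # 101.25 1.25 16.25

crowd_sq, avg_sq, diversity = squared_error_decomposition(forecasts, truth)
print(crowd_sq, avg_sq - diversity)  # both 1.5625
```

No individual here is closer than 5 to the truth, yet the crowd misses by only 1.25 because the high and low errors offset each other. If every forecast were on the same side of the truth, nothing would cancel and the crowd's error would simply equal the average individual error, which is why the guarantee is "at least as good as the average individual" rather than "better than everyone".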