It seems like we hear a new story every week: a video, or a rumor, or a song, or a commercial has "gone viral," spreading across the web like wildfire, racing to the top of the most-tweeted list, and grabbing headlines in real, old-fashioned news media. These memes can be disgusting (like the Domino's pizza video), controversial (like the recent Kony 2012 video), or entertaining ("Friday"?). They can be disasters for companies (see Domino's above), or marketing campaigns that reach hundreds of thousands, or even millions, of viewers for relatively little investment (1300 foot drop, the Old Spice Guy). Given the potential impact of these "memes," there is a lot of interest in what exactly determines whether or not a video, or a message, or a rumor goes viral. Here's a simple model that explains why some things do and some things don't.

Let's consider the example of a YouTube video. Suppose that on average, every person who views the video tells c of their friends about it per day (c stands for contacts), and suppose that some fraction i of the people who hear about the video actually watch it and start telling other people about it themselves (i stands for infectivity, and captures something like how interesting the video is). Finally, suppose that on average, each person who is actively spreading word of the video does so for d days before they get bored and stop telling people about it (d stands for duration).

To keep things simple, suppose that there are a total of N people in the population, and every one of these people is either actively spreading the video, or not actively spreading the video, but susceptible to becoming a video spreader. Let I denote the number of people currently spreading (i.e. infected) and S the number of people that are susceptible, but not currently spreading the video. So, I+S=N.

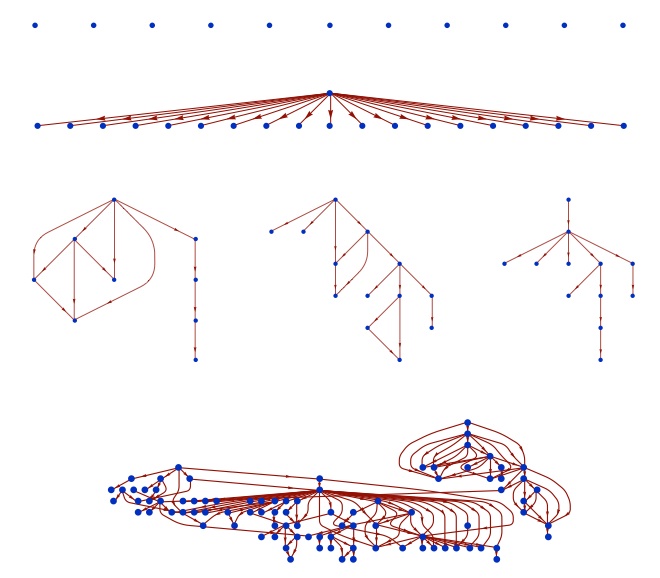

To see if the video goes viral or not, we just have to compare the rate at which people are becoming infected to the rate at which people stop sharing the video. It helps to think of a bathtub: the level of water in the tub represents the number of people spreading the video. The rate at which water flows in through the faucet is the rate at which new people are becoming infected with the video-spreading virus; the rate at which water drains out is the rate at which people stop spreading the video. If water flows in faster than it drains out, the tub will keep filling up. On the other hand, if the drain is more open than the faucet, the bathtub will never fill up.

So, we have to figure out the rate at which new people are starting to spread the video and the rate at which people currently sharing the video are stopping. The second one is easier. If I people are currently sharing the video and each one of them shares it for d days on average, then each day we expect I/d people to stop spreading the video. For the first rate, we have I people actively sharing the video. On average, each one of them shares the video with c contacts per day, resulting in a total of cI contacts per day for the whole population. But not all of these contacts result in a new person sharing the video. First, some of the people contacted will already be sharing the video. The probability that a given person is not currently sharing the video is S/N, the fraction of "susceptible" people in the population. So, we expect cIS/N instances per day in which a person shares the video with someone who is not currently spreading it. Given such a contact, we said that a fraction i of these will result in a new person sharing the video. Putting it all together, the rate at which new people are becoming infected with the video-sharing virus is ciIS/N.
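To make the two rates concrete, here is a minimal Python sketch that computes them for one set of inputs. The function name `spreading_rates` and the numbers plugged in (100 spreaders, a population of one million, c = 5, i = 0.1, d = 3) are made up purely for illustration; they do not come from any real video.

```python
def spreading_rates(I, N, c, i, d):
    """Return (inflow, outflow) in people per day for the simple model.

    I: people currently spreading, N: total population,
    c: contacts per spreader per day, i: infectivity, d: duration in days.
    """
    S = N - I                      # susceptible people, since I + S = N
    inflow = c * i * I * S / N     # new spreaders per day: ciIS/N
    outflow = I / d                # spreaders who stop per day: I/d
    return inflow, outflow


# Hypothetical values, chosen only to illustrate the comparison.
inflow, outflow = spreading_rates(I=100, N=1_000_000, c=5, i=0.1, d=3)
print(f"inflow = {inflow:.2f} per day, outflow = {outflow:.2f} per day")
print("faucet beats drain" if inflow > outflow else "drain beats faucet")
```

With these assumed inputs the faucet is running faster than the drain, so the number of spreaders would be growing at that moment.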

Now we have to compare our two rates. The video will go viral if ciIS/N>I/d. Dividing both sides by I and multiplying both sides by d, this becomes cidS/N>1. Finally, we can make life a little simpler by assuming that initially almost no one knows about the video, so the number of susceptible people S and the total population N are about the same. Then S/N is approximately 1, so the condition simplifies to just cid>1.
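Written out as a chain of steps, the algebra looks roughly like this. The last line plugs in illustrative values (c = 5, i = 0.1, d = 3) that are assumptions for the sake of example, not measurements.

```latex
\begin{align*}
\frac{c\,i\,I\,S}{N} &> \frac{I}{d}
  && \text{(inflow exceeds outflow)} \\
\frac{c\,i\,d\,S}{N} &> 1
  && \text{(divide by } I \text{, multiply by } d\text{)} \\
c\,i\,d &> 1
  && \text{(early on, } S \approx N\text{)} \\
5 \times 0.1 \times 3 = 1.5 &> 1
  && \text{(hypothetical values: this video spreads)}
\end{align*}
```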

This simple inequality tells us whether or not the video will go viral. It says that if the average number of contacts, times the infectivity, times the duration is greater than one, the video will spread; otherwise it will die out. Right at cid=1 there is a tipping point; crossing this threshold abruptly changes the video's fate, from fizzling out to taking off.
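One way to see the tipping point is to step the two rates forward a day at a time. The sketch below does that in Python; the one-day update, the starting count of 10 spreaders, the population of one million, and the parameter values are all assumptions made for illustration, and `simulate` is a hypothetical helper name.

```python
def simulate(c, i, d, N=1_000_000, I0=10.0, days=200):
    """Advance the model one day at a time and return the daily spreader counts."""
    I = I0
    history = [I]
    for _ in range(days):
        S = N - I                          # susceptible people
        new_spreaders = c * i * I * S / N  # water in through the faucet
        dropouts = I / d                   # water out through the drain
        I = I + new_spreaders - dropouts
        history.append(I)
    return history


# Same contacts and infectivity, different durations (hypothetical values).
below = simulate(c=5, i=0.1, d=1.5)  # cid = 0.75, under the threshold
above = simulate(c=5, i=0.1, d=3.0)  # cid = 1.5, over the threshold

print(f"cid = 0.75: {below[-1]:.6f} spreaders after 200 days")
print(f"cid = 1.50: {above[-1]:.0f} spreaders after 200 days")
```

Under these assumptions the first run fizzles out to essentially zero, while the second grows until the shrinking pool of susceptible people slows it down, leveling off at roughly a third of the population.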

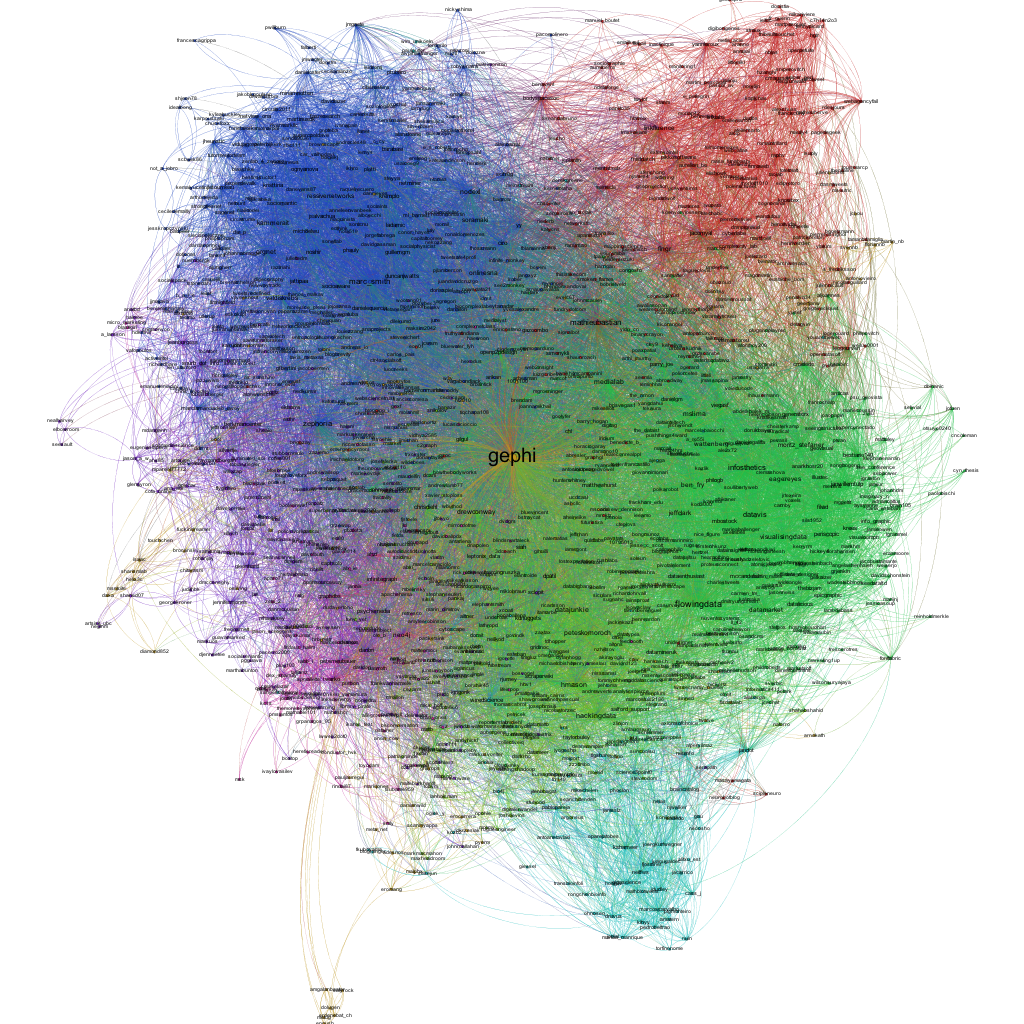

This model makes a lot of assumptions that don't really hold (the big ones are that people have roughly the same number of contacts on average, and that people basically interact at random), but it gives us a basic understanding of the process. Even in more complicated models, where we make fewer simplifying assumptions, there is typically a similar tipping point, and increasing contacts, infectivity, or duration increases the chance of crossing that threshold.

So, there you have it, everything you need to go viral: a network with enough contacts (c); a product, or message, that sounds interesting enough to be infectious (i); and enough staying power that people keep telling their friends about it for a long time (d).