The Wall Street Journal recently ran an interesting article about the rise of "Big Data" in business decision making. The author, Dennis Berman, makes the case for using big data by pointing out that human decision making is prone to all sorts of errors and biases (referencing Daniel Kahneman's fantastic new book, Thinking, Fast and Slow). There's anchoring, hindsight bias, availability bias, overconfidence, loss aversion, status quo bias, and the list goes on and on. Berman suggests that big data — crunching massive data sets looking for patterns and making predictions — may be the solution to overcoming these flaws in our judgement.

I agree with Berman that big data offers tremendous opportunities, and he's also right to emphasize the ever-increasing speed with which we can gather and analyze all that data. But you don't need terabytes of data or a self-organizing fuzzy neural network to improve your decisions. In many cases, all you need is a simple model.

Consider this example from the classic paper "Clinical Versus Actuarial Judgment" by Dawes, Faust and Meehl (1989). Twenty-nine judges with varying levels of experience were presented with the scores of 861 patients on the Minnesota Multiphasic Personality Inventory (MMPI), which scores patients on 11 different dimensions and is commonly used to diagnose psychopathologies. The judges were asked to diagnose each patient as either psychotic or neurotic, and their answers were compared with diagnoses from more extensive examinations conducted over a much longer period of time. On average the judges were correct 62% of the time, and the best individual judge correctly diagnosed 67% of the patients. But none of the judges performed as well as the "Goldberg Rule". The Goldberg Rule is not a fancy model based on reams of data; it's not even a simple linear regression. The rule is just the following simple formula: add three specific dimensions from the test, subtract two others and compare the result to 45. If the result is less than 45 the diagnosis is neurosis; if it is 45 or more, psychosis. The Goldberg Rule correctly diagnosed 70% of the patients.
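To show just how little machinery the rule needs, here is a minimal Python sketch of it. The five scale abbreviations (L, Pa, Sc, Hy, Pt) are my assumption, taken from the way the rule is usually published rather than from anything stated above, and the two patient profiles are invented purely for illustration. Note the direction of the cutoff: as Dawes, Faust and Meehl describe the rule, a result below 45 reads as neurotic and a result of 45 or more reads as psychotic.

```python
from typing import Mapping

# Assumption: these scale abbreviations follow the rule as it is usually
# published; the article itself does not name the five dimensions.
ADDED_SCALES = ("L", "Pa", "Sc")
SUBTRACTED_SCALES = ("Hy", "Pt")
CUTOFF = 45


def goldberg_index(scores: Mapping[str, float]) -> float:
    """Sum of the three added scales minus the sum of the two subtracted ones."""
    added = sum(scores[name] for name in ADDED_SCALES)
    subtracted = sum(scores[name] for name in SUBTRACTED_SCALES)
    return added - subtracted


def goldberg_diagnosis(scores: Mapping[str, float]) -> str:
    """Below the cutoff reads as neurotic; at or above it reads as psychotic."""
    return "psychotic" if goldberg_index(scores) >= CUTOFF else "neurotic"


# Made-up profiles for illustration only, not real patient data.
profile_a = {"L": 50, "Pa": 70, "Sc": 75, "Hy": 60, "Pt": 65}
profile_b = {"L": 50, "Pa": 55, "Sc": 58, "Hy": 70, "Pt": 72}

for name, profile in (("A", profile_a), ("B", profile_b)):
    print(name, goldberg_index(profile), goldberg_diagnosis(profile))
```

That is the whole model: five lookups, one subtraction and one comparison.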

It's impressive that this simple non-optimal rule beat every single individual judge, but the study didn't stop there. The judges were provided with additional training on 300 more samples in which they were given the MMPI scores and the correct diagnosis. After the training, still no single judge beat the Goldberg Rule. Finally, the judges were given not just the MMPI scores, but also the prediction of the Goldberg Rule along with statistical information on the average accuracy of the formula, and still the rule outperformed every judge. Since there are only two possible diagnoses, every override flips the rule's answer, so this means that each judge overrode the rule more often in cases where it was actually correct than in cases where it was incorrect.
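The arithmetic behind that last point can be sketched in a few lines of Python. This assumes a judge who follows the rule except where they choose to override it, and a two-way diagnosis, so an override turns a correct call into a wrong one and vice versa. The override counts below are hypothetical; only the 861 patients and the roughly 70% hit rate come from the study described above.

```python
def judge_accuracy(n_cases: int, rule_correct: int,
                   overrides_when_right: int, overrides_when_wrong: int) -> float:
    """Accuracy of a judge who follows the rule except on overridden cases.

    With only two possible diagnoses, overriding a correct call makes it
    wrong, and overriding a wrong call makes it right.
    """
    rule_wrong = n_cases - rule_correct
    if overrides_when_right > rule_correct or overrides_when_wrong > rule_wrong:
        raise ValueError("cannot override more cases than exist")
    judge_correct = rule_correct - overrides_when_right + overrides_when_wrong
    return judge_correct / n_cases


N_CASES = 861
RULE_CORRECT = 603  # about 70% of 861

rule_accuracy = RULE_CORRECT / N_CASES

# Hypothetical override counts, for illustration only.
worse = judge_accuracy(N_CASES, RULE_CORRECT, overrides_when_right=80, overrides_when_wrong=40)
better = judge_accuracy(N_CASES, RULE_CORRECT, overrides_when_right=40, overrides_when_wrong=80)

print(f"rule: {rule_accuracy:.3f}  judge A: {worse:.3f}  judge B: {better:.3f}")
```

A judge ends up below the rule exactly when the first count exceeds the second, which is the pattern every judge in the study showed.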

This is just one of many studies showing that simple models outperform individual judgement. In his book "Expert Political Judgment," Philip Tetlock examined 28,000 forecasts of political and economic outcomes by experts and concluded, “It is impossible to find any domain in which humans clearly outperformed crude extrapolation algorithms, less still sophisticated statistical ones.”

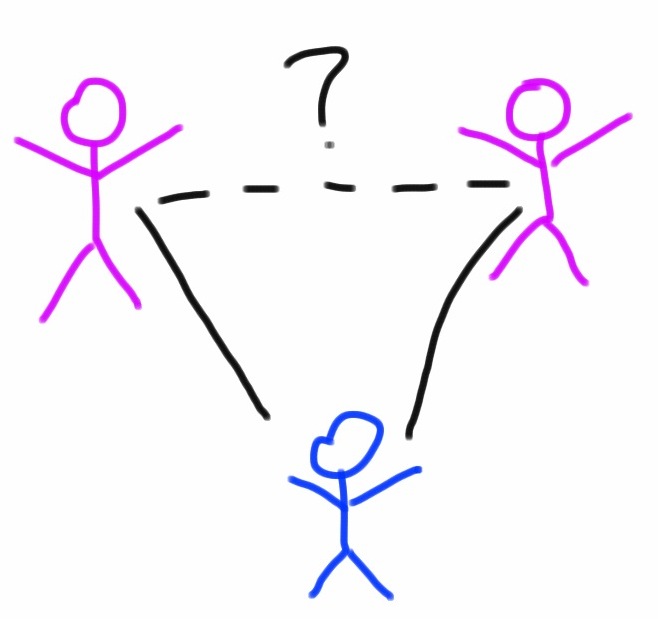

And the great thing is, you don't have to be a mathematician or statistician to benefit from the decision-making advantage of models. As Robyn Dawes has shown, even the wrong model typically outperforms individual judgement. So the next time you face an important decision, before you fire up the supercomputer, write down the factors that you think are the most important, assign them weights and add them up. Even something as simple as making a list of pros and cons, adding the pros and subtracting the cons, is likely to result in a better decision. As Dawes writes, “The whole trick is to know what variables to look at and then know how to add.”
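If you'd rather let a few lines of Python do the adding, here is a rough sketch of both approaches: a weighted sum of factors and a plain pros-minus-cons tally. Everything in it is hypothetical, including the job-offer scenario, the factor names, the 1 to 5 ratings and the weights. The point is the shape of the calculation, not the numbers.

```python
from typing import Iterable, Mapping


def weighted_score(ratings: Mapping[str, float], weights: Mapping[str, float]) -> float:
    """Multiply each factor's rating by its weight and add the results."""
    return sum(weights[factor] * ratings[factor] for factor in weights)


def pro_con_score(pros: Iterable[str], cons: Iterable[str]) -> int:
    """Unit weights: every pro counts +1 and every con counts -1."""
    return len(list(pros)) - len(list(cons))


# Hypothetical weights: how much each factor matters to you.
weights = {"salary": 3, "commute": 2, "growth": 3}

# Hypothetical ratings on a 1 (poor) to 5 (great) scale.
options = {
    "offer_a": {"salary": 4, "commute": 2, "growth": 5},
    "offer_b": {"salary": 5, "commute": 4, "growth": 2},
}

scores = {name: weighted_score(ratings, weights) for name, ratings in options.items()}
best = max(scores, key=scores.get)
print(scores, "->", best)

# The even simpler version: count the pros, subtract the cons.
tally = pro_con_score(
    pros=["interesting work", "good team", "room to grow"],
    cons=["long commute"],
)
print("pro/con tally:", tally)
```

Writing the weights down before you score the options is most of the discipline here; the code just keeps you from fudging the sum afterwards.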